The evolution of interest rates in the United States offers a revealing window onto the nation's economic history. From the establishment of the Federal Reserve in 1913, through the Great Depression, to the 2008 financial crisis, the movement of interest rates tells a story of economic peaks and troughs. At their core, interest rates represent the cost of borrowing money; as such, they exert significant influence over economic activity, consumer behavior, and fiscal policy.

Throughout the 20th and 21st centuries, monetary policy, the Federal Reserve's management of the money supply and interest rates, has used these rates as a tool to navigate economic recessions and manage inflation. For instance, in the early 1980s, the federal funds rate reached a record of nearly 20% as part of a strategy to combat stagflation. Conversely, in the aftermath of the 2008 financial crisis, interest rates were cut to near zero to stimulate economic activity. Examining the history of interest rates in the United States therefore offers a clear view of the nation's broader economic story.

Introduction – Interest Rates

Before tracing this history, it is worth looking at how interest rates work in the United States. The topic requires some understanding of the mechanisms of the US economy, which these rates strongly influence.

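Interest rates in the US are heavily influenced by the Federal Reserve, often referred to as the Fed. The Fed sets a target for the federal funds rate, the rate banks charge one another for overnight loans, and adjusts it to control inflation, support employment, and foster economic growth; changes in that benchmark ripple through to the rates on loans, mortgages, and savings accounts. For instance, during economic downturns, the Fed typically lowers interest rates to stimulate borrowing and spending, which in turn spurs economic activity.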

In the United States, interest rates vary based on several factors. These include the type of financial product (such as loans or savings accounts), the term length (short-term or long-term), and the creditworthiness of the borrower. For example, a long-term loan typically has a higher interest rate than a short-term one due to the increased risk over a longer period.

Moreover, the fluctuations in interest rates significantly impact the economy. For instance, when interest rates rise, consumers tend to save more and spend less, which can slow economic growth. Conversely, lower interest rates encourage spending, stimulating economic activity. Thus, understanding the dynamics of interest rates in the US is crucial for comprehending the broader economic picture.

The Early Years of Interest Rates

Delving into history, the story of interest rates in the United States sheds light on the nation's economic evolution. The early years, spanning from the establishment of the First Bank of the United States in 1791 to the eve of World War I, saw interest rates shift considerably, largely driven by the financial needs of a young nation.

During this period, interest rates were volatile. In 1791, for instance, the average interest rate stood at a high 8%. This elevated rate was primarily due to the country's nascent economy and the inherent risks associated with lending to a new nation.

However, as the country progressed and the economy expanded, interest rates gradually declined. By 1913, on the eve of World War I, the average interest rate had dropped to a mere 3.84%. This represented a significant shift, indicative of the country’s economic maturation and stability.

In essence, the early years of interest rates in the United States served as a barometer of the nation's economic health, reflecting the ups and downs of a burgeoning economy. This era ultimately set the stage for the complex financial system that exists today.

The 1920s

Transitioning from the foundation years, the 1920s ushered in a new era of financial stability in the United States. The decade, famously known as the Roaring Twenties, was characterized by unprecedented economic growth and prosperity.

Despite this vibrant economic atmosphere, interest rates during the 1920s were relatively low. The Federal Reserve, established in 1913, was still developing its approach to monetary policy. In 1921, the discount rate, which is the interest rate that the Federal Reserve charges commercial banks, stood at a high of 7 percent. By 1927 it had been cut to 3.5 percent, although the Fed raised it again in 1928 and 1929, to 6 percent, in an effort to cool stock market speculation.

This low-interest-rate environment fostered a climate of easy credit and speculative investment, particularly in the stock market. Rising consumer spending also stimulated economic activity. Yet this prosperity did not last. The stock market crash of 1929 and the Great Depression that followed brought an abrupt end to the era of easy credit and economic growth.

The 1930s

Following the prosperity of the Roaring Twenties, the 1930s brought a stark contrast: a decade of severe economic turmoil and depression.

Interest rates in the United States during the 1930s were shaped primarily by the Great Depression. Just before the decade began, in 1928 and 1929, the Federal Reserve had raised the discount rate in an attempt to curb speculative stock market trading, a tightening that many economists believe contributed to the 1929 crash and the downturn that followed.

After the crash, the central bank cut the discount rate sharply, from 6% in 1929 to 1.5% by mid-1931, to stimulate economic activity by making borrowing cheaper and encouraging investment. It then raised the rate again in late 1931 to defend the dollar's convertibility into gold, a move widely criticized by economists. The rate fell back to 1.5% in 1934 and remained below 2% for the rest of the decade.

However, even with these low rates, severe deflation in the early 1930s made the real cost of borrowing high: when prices fall, borrowers repay their loans with dollars that are worth more than the ones they borrowed. This deflationary environment created a vicious cycle where businesses were reluctant to borrow and invest, exacerbating the economic downturn.
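The "real cost of borrowing" is the nominal interest rate adjusted for changes in the price level. The sketch below applies the standard Fisher relation, real = (1 + nominal) / (1 + inflation) - 1, alongside its common approximation, nominal minus inflation. The rates used are hypothetical values chosen for illustration, not measured 1930s data, and the function names are illustrative.

```python
def real_rate_exact(nominal: float, inflation: float) -> float:
    """Fisher relation: real = (1 + nominal) / (1 + inflation) - 1."""
    return (1 + nominal) / (1 + inflation) - 1


def real_rate_approx(nominal: float, inflation: float) -> float:
    """Common approximation: real ~ nominal - inflation."""
    return nominal - inflation


# Hypothetical scenarios (illustrative values only, not historical data).
scenarios = {
    "2% loan, prices falling 5% a year": (0.02, -0.05),
    "2% loan, prices rising 3% a year": (0.02, 0.03),
}

for label, (nominal, inflation) in scenarios.items():
    print(
        f"{label}: real rate ~ {real_rate_approx(nominal, inflation):.2%} "
        f"(exact {real_rate_exact(nominal, inflation):.2%})"
    )
```

Under deflation, the same 2% loan costs roughly 7% in real terms, while with mild inflation its real cost turns slightly negative. This is why nominally low rates did little to encourage borrowing in the early 1930s.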

The 1930s was a decade of exceptional economic distress that led to major reforms of the Federal Reserve and the banking system, such as the Banking Acts of 1933 and 1935. It was a period defined by profound changes in the financial landscape, changes that would shape the direction of U.S. monetary policy for years to come.

The 1940s

Transitioning from the economic turmoil of the 1930s, the 1940s saw a remarkable shift in the economic landscape of the United States, particularly in the realm of interest rates. As the nation entered the decade, it was still recovering from the Great Depression, with interest rates hovering around 1-2%. This was an attempt by the Federal Reserve to stimulate economic growth by making borrowing less expensive.

However, U.S. entry into World War II in December 1941 marked a turning point. The government issued war bonds and other securities on a massive scale to fund military operations. In 1942, at the Treasury's request, the Federal Reserve agreed to peg interest rates on government debt, holding the Treasury bill rate at 3/8 of 1 percent and capping long-term government bond yields at 2.5 percent. The goal was to keep the cost of financing the war low, and the Fed bought whatever securities were needed to hold the peg.

After the war, as inflation surged, the Federal Reserve began allowing short-term rates to rise in 1947 and 1948, although rates remained low by later standards and the cap on long-term government bond yields stayed in place until 1951. This tension between fighting inflation and supporting Treasury borrowing carried into the 1950s.

In short, the 1940s were a decade of significant change in U.S. interest rate policy, guided largely by the economic demands of war and post-war recovery. This period set the stage for the monetary policies of the subsequent decades.

The 1950s

Emerging from the shadow of the 1940s, the 1950s ushered in a period of economic stability and unprecedented growth in the United States. In contrast to the previous decade’s fluctuation, interest rates during the 1950s were relatively stable, reflecting the nation’s strong economic health.

The decade started with the Federal Reserve maintaining a low-interest rate policy. The average interest rate in 1950 was a modest 2%. This approach was intended to stimulate economic growth and prevent a return to depression. It was successful in achieving this goal, with the Gross Domestic Product (GDP) growing by an impressive 37% throughout the decade.

However, inflationary pressures, heightened by the outbreak of the Korean War in 1950, necessitated a change in policy. In March 1951, the Treasury-Federal Reserve Accord freed the Federal Reserve from its wartime commitment to peg government bond yields, restoring its independence to adjust interest rates in response to inflation. By the end of the decade, the discount rate had risen to 4%, reflecting the increased emphasis on controlling inflation.

The 1950s also saw the introduction of credit cards, revolutionizing the way Americans borrowed money. The Diners Club card, introduced in 1950, and the American Express card, launched in 1958, were charge cards whose balances had to be paid in full each month, while Bank of America's BankAmericard, also launched in 1958, let consumers carry a revolving balance at a set interest rate, further facilitating economic growth.

The 1960s

Transitioning from the tranquil economic climate of the 1950s, the 1960s presented a stark contrast, with interest rates that fluctuated considerably. The decade was characterized by rapid economic growth and expansion, leading to an increasingly complex financial landscape.

The Federal Reserve, the central banking system of the United States, encountered several challenges during this era. Government spending rose sharply to fund the escalating Vietnam War, widening the budget deficit, while the Great Society programs, aimed at alleviating poverty and racial discrimination, required substantial additional funding. Together, these commitments added to inflationary pressure in the economy.

The Federal Reserve responded to these economic complexities by adjusting interest rates, alternately trying to restrain inflation and to support growth, and the result was a volatile financial environment with sharp swings in rates. For instance, the effective federal funds rate stood at about 4% at the start of 1960 but had fallen to roughly 1.2% by 1961, during a brief recession, before climbing to around 9% by 1969 as inflation accelerated. This instability reflected the challenges the Federal Reserve faced in managing the economy and maintaining stability amid the financial tumult of the 1960s.

The 1970s

Transitioning from the financially tumultuous 1960s, the 1970s presented a new set of economic challenges, particularly in the realm of interest rates in the United States. This decade was marked by a phenomenon described as stagflation – a condition of slow economic growth, high unemployment, and high inflation.

Interest rates rose sharply over the decade as the Federal Reserve tried to combat these issues, and borrowing costs climbed across the economy. The average interest rate for a 30-year fixed mortgage rose from approximately 7.54% in 1971 to 11.2% in 1979. Inflation also soared, averaging more than 11% in 1979 and peaking at about 13.5% in 1980.

Monetary policy under the chairmanship of Arthur Burns swung between easing and tightening. In 1973 and 1974, for instance, the Federal Reserve tightened sharply, pushing the federal funds rate to a then-record of about 13% in mid-1974.

These high interest rates had a significant impact on various sectors of the economy. Homeownership became increasingly difficult due to high mortgage rates, and businesses faced higher borrowing costs, leading to reduced investment and slow growth.
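To see why higher mortgage rates strained homebuyers, the standard fixed-rate amortization formula, payment = P * r / (1 - (1 + r) ** -n) with monthly rate r and n monthly payments, can be applied to the 30-year mortgage averages of 7.54% for 1971 and 11.2% for 1979. The $100,000 loan amount and the `monthly_payment` helper are hypothetical, chosen only for round numbers; they are not historical loan sizes.

```python
def monthly_payment(principal: float, annual_rate: float, years: int = 30) -> float:
    """Fixed-rate amortization: P * r / (1 - (1 + r) ** -n)."""
    r = annual_rate / 12  # monthly interest rate
    n = years * 12        # total number of monthly payments
    if r == 0:
        return principal / n  # no interest: repay principal evenly
    return principal * r / (1 - (1 + r) ** -n)


loan = 100_000  # hypothetical loan size, for illustration only
for rate in (0.0754, 0.112):
    print(f"{rate:.2%}: ${monthly_payment(loan, rate):,.2f} per month")
```

On this hypothetical loan, the monthly payment rises from roughly $702 to roughly $967, about 38% more for the same amount borrowed.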

The 1980s

Transitioning from the tumultuous economic landscape of the 1970s, the 1980s ushered in a new era of financial policy that would dramatically impact the country’s economic trajectory. The decade of the 80s is most notably remembered for the drastic shifts in interest rates that occurred within the United States.

The decade began with the Federal Reserve, under the leadership of Paul Volcker, raising interest rates to unprecedented levels in a bid to combat inflation. The Federal funds rate climbed to a record of roughly 20 percent in 1981, a move designed to stabilize the economy by curbing rampant inflation.

However, this policy was not without its repercussions. While it eventually reined in inflation, it also contributed to a severe recession in the early 1980s. Unemployment peaked at 10.8 percent in late 1982, at that point the highest rate since the Great Depression.

Interest rates declined over the rest of the decade, though not in a straight line. By the end of the decade, the Federal funds rate had fallen to roughly 8 percent, reflecting a return to economic stability. Yet the legacy of the 1980s' high interest rates continued to shape the country's economic policies and strategies well into subsequent decades.

This era of high interest rates remains one of the most significant and influential periods in the history of the United States’ economic policy.

The 1990s

Transitioning from the 1980s, the 1990s presented a transformative era in the landscape of the United States’ interest rates. This decade was marked by significant fluctuations in rates, influenced by various economic factors and policy decisions.

The decade opened with rates still elevated: in 1990, the federal funds rate stood at about 8.10%, a legacy of the Fed's late-1980s fight against inflation. As the economy slipped into recession in 1990-91, however, the Fed cut rates repeatedly, bringing the federal funds rate down to 3.00% by late 1992, where it remained through 1993; this was the lowest level since the early 1960s. The Fed then reversed course in 1994, raising rates to 6% by early 1995 to head off inflation.

Nonetheless, periods of easy credit had their drawbacks. Critics argue that the Fed's rate cuts in late 1998, made in response to global financial turmoil, helped fuel the stock market bubble of the late 1990s. The bubble burst in 2000, triggering the dot-com crash and contributing to the 2001 recession.

In summary, the 1990s can be characterized as a decade of substantial interest rate changes in the United States. The period was marked by the Federal Reserve’s shifting policies, which had significant impacts on the economy.

The 2000s

Moving from the financial landscape of the 1990s, the 2000s ushered in a new era of interest rate activity in the United States. This decade was marked by considerable volatility, largely due to the economic upheaval caused by the bursting of the dot-com bubble, the 9/11 attacks, and the housing market collapse that led to the Great Recession.

In the early 2000s, the Federal Reserve, under the leadership of Chairman Alan Greenspan, implemented a series of aggressive rate cuts to stimulate the economy. The Federal Funds rate, a key benchmark for short-term interest rates, was reduced from over 6% in 2000 to just 1% by 2003. These cuts were a response to the recession triggered by the dot-com bubble burst and the subsequent downturn in the stock market.

As the economy began to recover, interest rates started to rise. By mid-2006, the Federal Reserve had raised the Federal Funds rate to 5.25%. This tightening of monetary policy contributed to the collapse of the housing bubble, which helped trigger the Great Recession that began in December 2007. In response, the Federal Reserve cut rates dramatically, bringing the Federal Funds rate to a target range of 0 to 0.25% by the end of 2008.

This tumultuous period underscores the critical role interest rates play in the stability and growth of the economy.

The 2010s

Turning the page to the 2010s, the United States witnessed a significant shift in its interest rate landscape. After the Great Recession of the late 2000s, the US Federal Reserve maintained a zero interest rate policy (ZIRP), keeping its benchmark rate near zero in an attempt to stimulate economic growth.

From the end of 2008 to late 2015, the Federal Funds Rate, a pivotal interest rate in U.S. financial markets, hovered around 0 to 0.25 percent, an unprecedented low. The rationale for this policy was to make borrowing cheaper, thus encouraging investment and consumption, the primary engines of economic growth.

However, as the US economy recovered, the Federal Reserve began a gradual tightening cycle in December 2015, raising the Federal Funds Rate in small steps until its target range reached 2.25 to 2.50 percent by the end of 2018. This phase marked a significant shift in monetary policy, from crisis response toward a more normal monetary policy environment.

Yet in 2019, in response to economic uncertainties and to cushion the economy, the Federal Reserve cut rates three times, leaving the Federal Funds Rate target range at 1.50-1.75 percent at the close of the decade.

The 2020s and beyond

Emerging from the shadow of the 2010s, the United States entered the 2020s, a time of profound economic disruption and uncertainty marked by the global COVID-19 pandemic. In this context, understanding interest rates in the United States becomes especially important.

The Federal Reserve, the United States’ central bank, responded to the economic fallout of the pandemic by cutting its benchmark interest rate to nearly zero in March 2020. This action, designed to stimulate economic activity, was an echo of the policy response to the 2008 global financial crisis.

However, this era also brought in unique challenges. For instance, the threat of inflation re-emerged as a significant concern for the first time in decades. Amidst rising prices and supply chain disruptions, the Federal Reserve signaled in 2021 that interest rate hikes could be on the horizon. This was intended to curb inflation and ensure economic stability.

Notably, the 2020s have also brought growing attention to digital currencies, from cryptocurrencies to proposed central bank digital currencies, and to their potential impact on interest rates. As these currencies gain popularity, the Federal Reserve and other central banks are grappling with what they could mean for monetary policy.

2022 to 2023 Interest Rate Increases

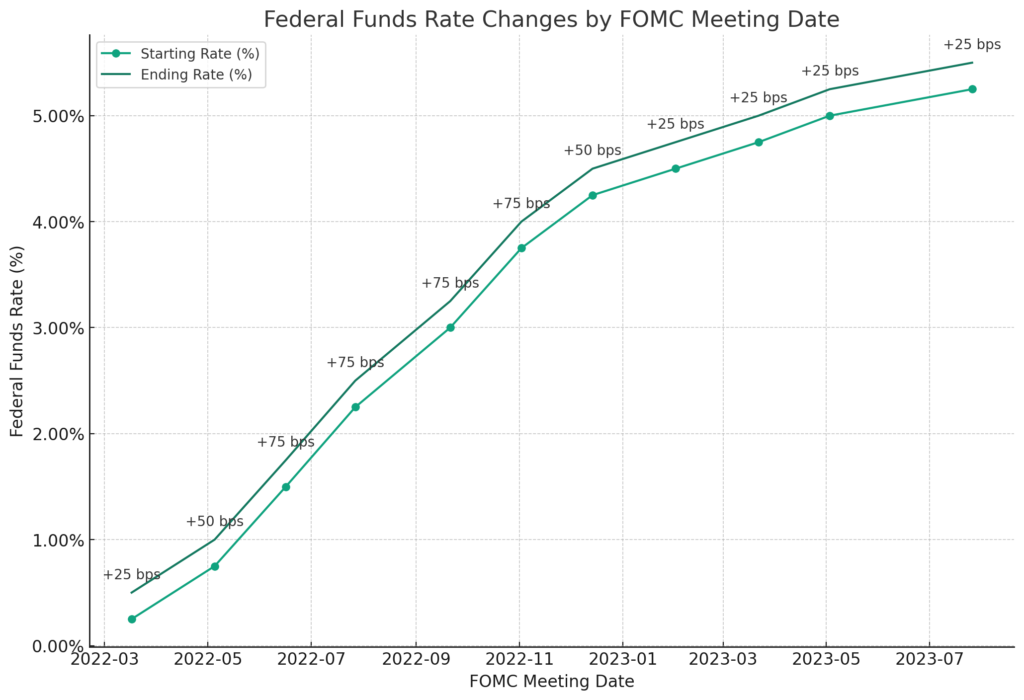

In 2022 and 2023, the Federal Reserve raised interest rates aggressively. The increases began with a 25-basis-point hike (a basis point is one hundredth of a percentage point) that took effect on March 17, 2022. By the end of 2022, the Federal Funds Rate target range had climbed to 4.25%-4.50%, and further increases in 2023 lifted it to 5.25%-5.50% by July. The objective of these aggressive measures was two-fold.

First, the Federal Reserve aimed to curb inflation, which had climbed to its highest level in about four decades and was significantly impacting the economy. Second, it sought to stabilize financial markets that had been experiencing increased volatility. This approach was not without its risks, as it could potentially slow down economic growth. However, the Federal Reserve's decisive action underscores its commitment to ensuring economic stability in the long term. The effects of these interest rate hikes are still unfolding.

- The blue line with circle markers represents the starting rate before each FOMC meeting.

- The orange line with ‘x’ markers represents the ending rate after each FOMC meeting.

- Each point on the orange line is annotated with the corresponding rate change in basis points (bps).
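The two series in the chart can be reproduced from the size of each increase. The sketch below is a minimal reconstruction, assuming a starting target range of 0% to 0.25% and the increases announced at the eleven FOMC meetings that raised rates between March 2022 and July 2023. `HIKES_BPS`, `rate_path`, and `fmt` are illustrative names, not part of any Federal Reserve data API.

```python
from datetime import date

# Increases announced at FOMC meetings, in basis points (1 bp = 0.01 percentage point).
# Dates are decision dates; the March 2022 increase took effect on March 17.
HIKES_BPS = [
    (date(2022, 3, 16), 25),
    (date(2022, 5, 4), 50),
    (date(2022, 6, 15), 75),
    (date(2022, 7, 27), 75),
    (date(2022, 9, 21), 75),
    (date(2022, 11, 2), 75),
    (date(2022, 12, 14), 50),
    (date(2023, 2, 1), 25),
    (date(2023, 3, 22), 25),
    (date(2023, 5, 3), 25),
    (date(2023, 7, 26), 25),
]


def rate_path(start_lower_bps: int = 0, width_bps: int = 25):
    """Yield (date, start range, end range, change) per meeting, all in bps."""
    lower = start_lower_bps
    for day, change in HIKES_BPS:
        start = (lower, lower + width_bps)  # "starting rate" series in the chart
        lower += change
        yield day, start, (lower, lower + width_bps), change  # "ending rate" series


def fmt(range_bps):
    """Format a (lower, upper) range in bps as a percentage string."""
    low, high = range_bps
    return f"{low / 100:.2f}%-{high / 100:.2f}%"


for day, start, end, change in rate_path():
    print(f"{day}: {fmt(start)} -> {fmt(end)} (+{change} bps)")
```

Working in whole basis points avoids floating-point rounding. Summing the changes gives 425 bps for 2022, ending at 4.25%-4.50%, and 525 bps through July 2023, ending at 5.25%-5.50%.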

More on Interest Rates

- What were the interest rates in 1995 (USA)?

- What were the interest rates in 2000 (USA)?

- What were the interest rates in 2005 (USA)?

- What were the interest rates in 2010 (USA)?

- What were the interest rates in 2011 (USA)?

- What were the interest rates in 2012 (USA)?

- What were the interest rates in 2014 (USA)?

- What were the interest rates in 2015 (USA)?

- What were the interest rates in 2016 (USA)?

- What were the interest rates in 2017 (USA)?

- What were the interest rates in 2018 (USA)?

- What were the interest rates in 2019 (USA)?

- What were the interest rates in 2020 (USA)?

- What were the interest rates in 2021 (USA)?

- What were the interest rates in 2022 (USA)?

- What were the interest rates in 2023 (USA)?

Conclusion

Understanding the historical trajectory of interest rates in the United States provides critical insights into the nation’s economic health and monetary policies. From the early years to the present, fluctuations in rates have been indicative of the country’s responses to various economic challenges and opportunities.

Moving into the 2020s and beyond, it remains crucial to monitor interest rate trends as they continue to shape key aspects of the economy. In an ever-evolving economic landscape, the role of interest rates is undeniably pivotal in strategic financial decision-making.